Monte Carlo Tree Search is a machine learning method used to efficiently explore very large state spaces. The method was famously used by Alpha Go and Alpha Go Zero to beat the best human Go players. This article describes a few of my insights into the method after using it to train a deep neural network to play the game of Hex.

Monte Carlo Tree Search (MCTS) is comparable to traditional tree-search methods such as minimax, but differs in a few ways. A key difference is the way MCTS uses environment rollouts instead of heuristics or complete game trees to evaluate nodes. This means that we do not need to incorporate expert knowledge about any particular problem into the system. For games such as Hex and Go, formulating expert heuristics are akin to solving the problem itself, and these games are therefore difficult to solve using traditional methods.

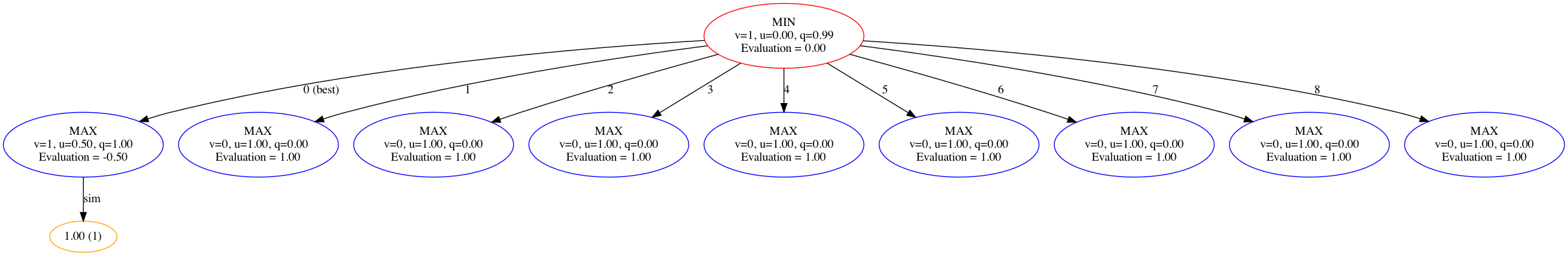

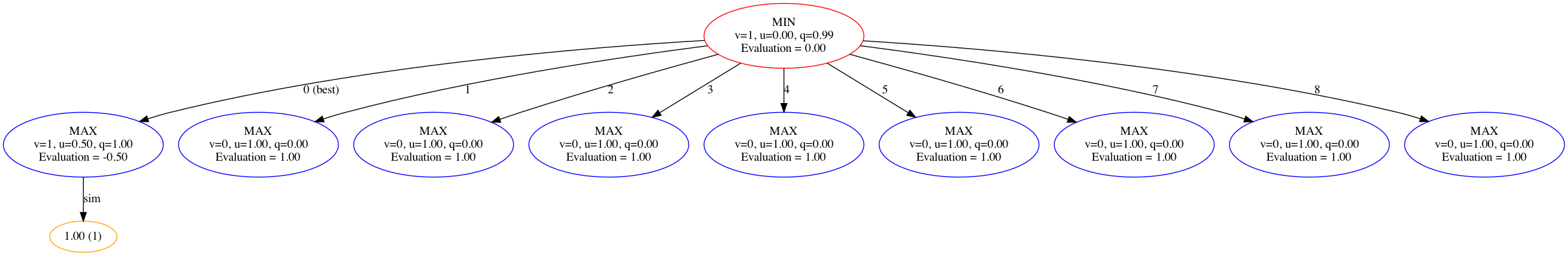

Monte Carlo Tree Visualization

MCTS also uses a tree policy (typically based on the upper confidence bound) to explore the tree. When combined with deep learning for function approximation this provides intelligent exploration of the environment implicitly, which would otherwise have to be managed though strategies such as epsilon-greedy agents.

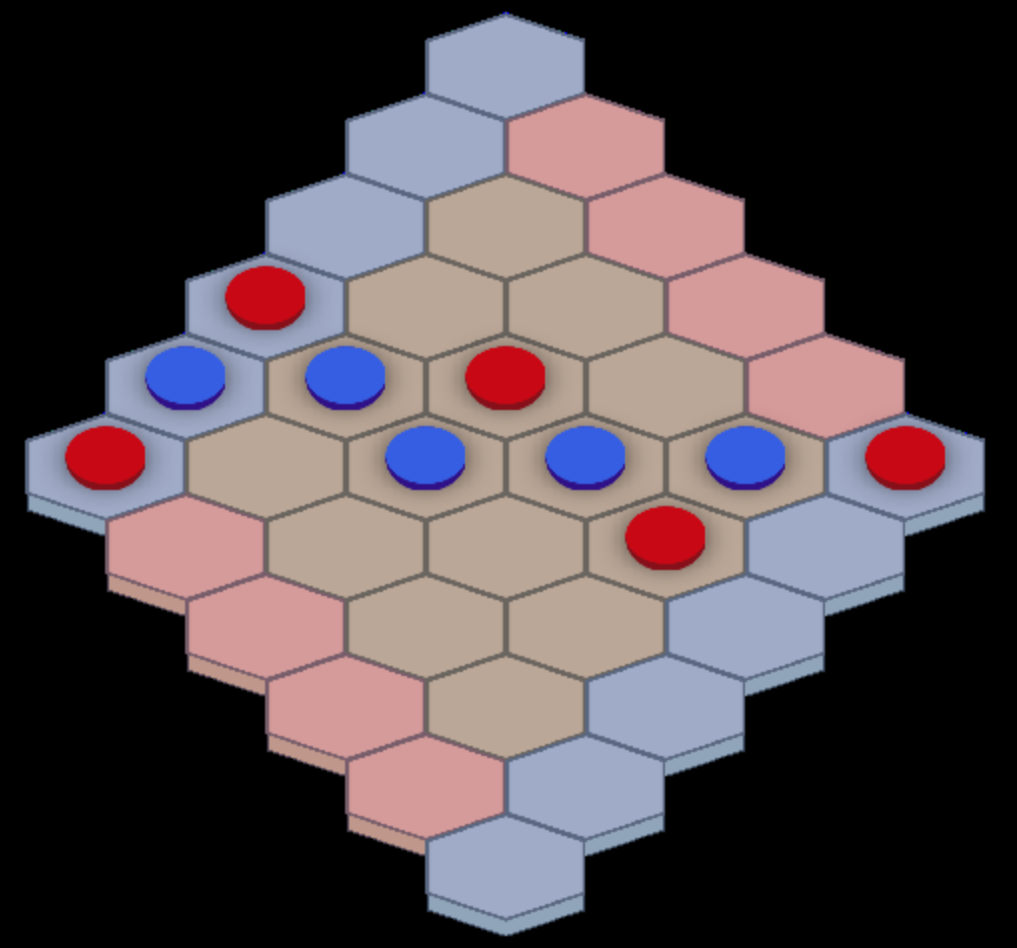

Although the state representation is not strictly a feature of MCTS, it still requires consideration to enable the reinforcement learning system to perform well. In my initial tests I used a flattened grid representation where 0s indicated an empty tile, and 1s and 2s indicated pieces placed by player 1 and player 2 respectively. This representation performed very poorly. Due to the way neural networks separate features it is very difficult to learn the (significant) difference between your and your opponents pieces when their value representations are both greater than zero. Using values of -1 and 1 instead improved the learning capacity of the system significantly.

My agent playing the game of Hex

My best results were achieved using a 3-dimensional image representation, where each channel represented a single player. Intuitively, this seemed to increase the ability of the network to understand the difference between each player piece at the same location. Since the game of hex is symmetrical we can also transpose the representation between each move, such that the network only needs to learn to play as one of the two players. This effectively reduces the state space by a factor of two.

When the tree search process reaches a node, the state is branched to generate children nodes for which exploration statistics are recorded. One hyperparameter we can use is the minimum number of visits to a node before it is expanded in this way. Given a minimum visit count of zero, downstream exploration of a parent node A will use the tree policy exclusively. This policy is highly explorative, but will over time converge towards the true distribution for its child nodes, which will be reflected in the statistics of A. In real training, however, we cannot realistically explore every child node enough to find accurate metrics for each one, especially because they will keep branching as well. Given N children and N visits to A, the estimate for A will be an average of the (one-shot) estimate of every possible move from A. This means that it will not consider the behaviour policy at all. After significant training the behaviour policy may already have found that a particular move from A is best, and we end up losing this information during exploration.

By incorporating a minimum visit count we bias the estimate by incorporating knowledge from the current behaviour policy. Given 100 visits to A and a minimum visit count of 10 we get 10% of our estimate directly from the knowledge we already have, which may increase the rate of convergence significantly. My system achieved good results using this method given enough search time. Intuitively, a minimum visit count as a function of the current training episode could perform even better. This solution would be equivalent to trusting the behaviour policy more over time, which could reduce oscillation and improve convergence properties late in training.

My final takeaway from this project is that great performance is achieved through large amounts of computing power. For complicated games with dozens of possible actions, the difference between 100 and 1000 searches for each move is very significant. In order to find good estimates for the training targets, a few visits per child node is simply not enough.

My training targets are based on the number of visits to each child node, such that the target probability of each action is simply its fraction of total visits. I was initially sceptical about this solution, because N is a function of the highly explorative UCB tree policy. This policy does, however, converge towards a true estimate as the number of searches increase. Using the estimated value (win probability) instead may seem like a good idea, but provides way too much variance unless the number of searches is extremely high. The visit count target may perhaps lose some information, but provides a significantly more stable target for training. This ensures convergence towards the ground truth over time, even if the search time is comparatively low.

Thanks for reading. This project was completed as part of one of my artificial intelligence courses at NTNU, and I'm therefore unable to share the code implementation at this time. Hope you found some of my insights useful!